🎓 a primer on GPT-3

This post has been co-written by yours truly and, in part, by an AI language model - see if you can decipher the authors :)

I thought I’d write about something slightly different from previous posts - language models, and OpenAI’s GPT-3 model in particular - as recent step-change advances in NLP seem to be opening up a large number of use cases and the potential for a new platform to be built. If you have recurring nightmares about our AI overlords then you should stop reading.

what is gpt-3?

OpenAI recently launched the GPT-3 general language model, a follow-up to their two prior GPT models and comparable to language models by companies such as Facebook, Nvidia and Microsoft (annotated in graph below).

GPT-3 is the largest language model created to date, with 175 billion parameters, versus Microsoft's Turing-NLG at 17 billion parameters. The model was trained on an uncategorised corpus of 499 billion tokens (data points), which is made up of a filtered crawl of the internet, Wikipedia and a large body of English-language books.

The current model is 116x larger than OpenAI’s prior GPT-2, and should not merely be thought of as a quantitatively larger “tweak yielding” model but as qualitatively more powerful across a number of dimensions. Most importantly, GPT-3 displays eerie learning and partial reasoning capabilities based on very little textual input - this is to say the model can “meta-learn” and provide outputs based on very few prior examples.
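For a rough sense of scale, the ratios are quick to check. This is only a sketch: the GPT-3 and Turing-NLG figures are the ones quoted above, while the 1.5 billion figure for GPT-2 is my assumption, based on the size of its largest released version.

```python
# Parameter counts in billions.
# GPT-3 and Turing-NLG are the figures quoted in this post; the GPT-2 figure
# (1.5bn, its largest released version) is an assumption not stated in the post.
PARAMS_BN = {"GPT-2": 1.5, "Turing-NLG": 17.0, "GPT-3": 175.0}


def size_ratio(bigger: str, smaller: str) -> float:
    """Return how many times more parameters `bigger` has than `smaller`."""
    return PARAMS_BN[bigger] / PARAMS_BN[smaller]


if __name__ == "__main__":
    for name in ("GPT-2", "Turing-NLG"):
        print(f"GPT-3 is {size_ratio('GPT-3', name):.1f}x the size of {name}")
```

Under that assumption the GPT-2 ratio lands just under 117, which is where the "116x" figure comes from.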

"It's still magic, even if you know how it's done" - Terry Pratchett

background on the model’s overlords, openai

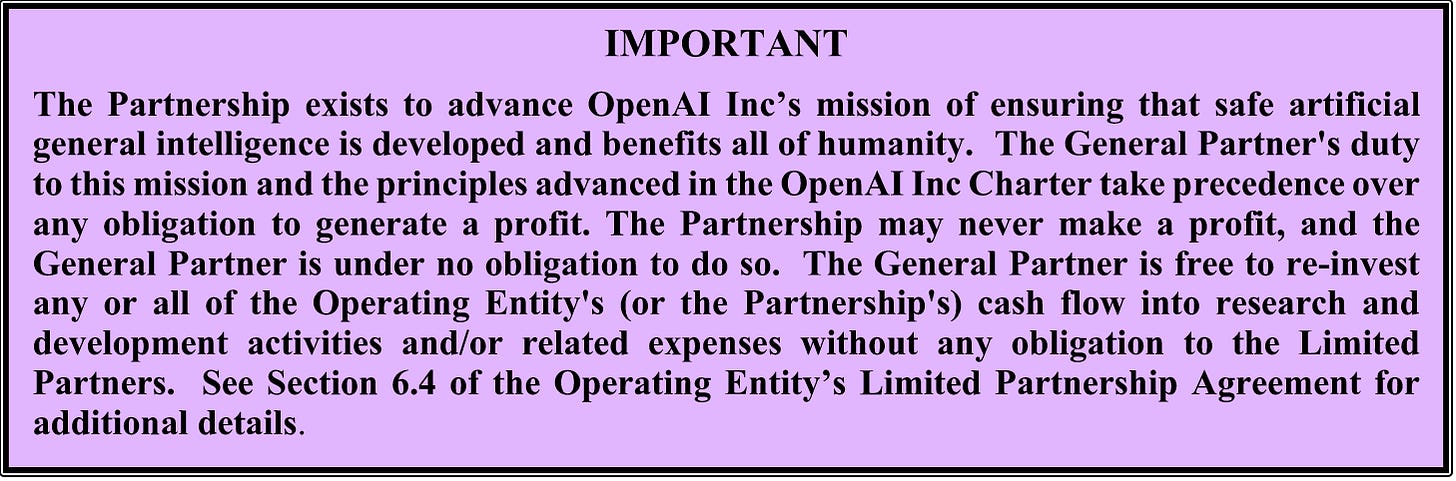

OpenAI was founded as a non-profit artificial general intelligence research lab in 2015 by Greg Brockman (CTO), Elon Musk, Sam Altman (CEO) and Ilya Sutskever (Chief Scientist), amongst others. The group’s explicit goal has been:

“OpenAI’s mission is to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity” - OpenAI Charter

In July 2019, OpenAI received an investment of $1bn from Microsoft, in addition to prior donations and investments from the likes of Khosla Ventures and Reid Hoffman. Earlier that year, OpenAI went from being a non-profit to a "capped-profit" company - meaning any profits generated above a 100x return from OpenAI LP will be passed on to the OpenAI charitable trust. Interpret that as you may. Whilst the limitations of a non-profit organisation are evident, especially when compute costs are punitively high, it seems there is mounting evidence that OpenAI will monetise this technology.

what makes gpt-3 different?

GPT-3 shows that language model performance scales as a power law of model size, dataset size and the amount of computation.
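To make "scales as a power law" a little more concrete: it means the loss falls by the same factor every time you multiply model size by a constant, so it shows up as a straight line on a log-log plot. Here's a minimal sketch for the model-size term only. The function names and the constants `n_c` and `alpha` are illustrative placeholders of mine, not fitted values from OpenAI.

```python
import math


def power_law_loss(n_params: float, n_c: float = 1e14, alpha: float = 0.08) -> float:
    """Loss as a power law of model size: L(N) = (n_c / N) ** alpha.

    n_c and alpha are hypothetical, illustrative constants (not fitted values).
    """
    return (n_c / n_params) ** alpha


def log_log_slope(n1: float, n2: float) -> float:
    """Slope of log(loss) against log(model size) between two model sizes."""
    rise = math.log(power_law_loss(n2)) - math.log(power_law_loss(n1))
    run = math.log(n2) - math.log(n1)
    return rise / run


if __name__ == "__main__":
    # Every 10x in parameters shrinks the loss by the same factor, 10 ** -alpha.
    for n in (1.5e9, 1.5e10, 1.5e11):
        print(f"{n:.1e} params -> loss {power_law_loss(n):.3f}")
    print("log-log slope:", log_log_slope(1.5e9, 175e9))
```

The slope comes out as `-alpha` whichever two sizes you pick, which is what makes the trend easy to extrapolate (and why people get excited about simply making the model bigger).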

Few-shot learning - Interestingly, the model’s zero- or few-shot inference capabilities mean that it doesn’t need to be shown hundreds or thousands of prior examples before being able to understand what to do. Humans are able to identify, learn or understand new objects and tasks from very few prior examples using reasoning; this is effectively zero-shot to few-shot learning. The potential 'killer use case' of this technology is the ability to get the AI system to undertake a task without any pre-defined examples or tuning.

One model, many uses - though debatable, there is some evidence to say that the model is starting to reason, versus just being incredibly adept at searching, classifying and outputting information. It's memorising concepts in a relatively fuzzy way, such as grammar constructs and stylistic elements, which is the first step towards generalisation. Additionally, and we’ll get into this later on, the model’s capabilities seem to scale well across a fairly wide array of use cases.

Scalability - whilst this model is an order of magnitude larger than previous models, it still seems as if the model is underfitted: the training curve hasn’t converged and the model hasn’t been through a full training epoch on the training corpus. This would suggest that this type of model architecture, the transformer, is able to scale by another few orders of magnitude in size.
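In practice, "few-shot" just means putting a handful of worked examples into the text you hand the model and letting it continue the pattern; nothing about the model itself is retrained. A minimal sketch of how such a prompt might be assembled is below. The `build_few_shot_prompt` helper and the `Input:` / `Output:` layout are my own hypothetical choices, not an OpenAI format, and the code only builds the text rather than calling any API.

```python
from typing import List, Tuple


def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a plain-text prompt: task description, worked examples, then the query.

    With an empty `examples` list this becomes a zero-shot prompt.
    The "Input:"/"Output:" labels are an arbitrary, hypothetical convention.
    """
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # Leave the final output blank so the model's continuation is the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Translate English to French.",
        [("cheese", "fromage"), ("sea otter", "loutre de mer")],
        "peppermint",
    )
    print(prompt)
```

The resulting string is what would be sent to the model as-is; going from zero-shot to few-shot is just a matter of how many example pairs you include.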

I’m looking to extend the readership, so if you’re enjoying this, please do share with friends and colleagues

limitations of the model

Nefarious use - an important consideration with language models is whether they’re used by bad actors, given the ability to create mass-scale disinformation. Interestingly, GPT-2’s initial release was gated due to these ethical concerns - though OpenAI have since lifted this restriction, given they found no “strong evidence of misuse”.

How the model learns - unlike humans, the model lacks intuition. Though much of the generated text reads extremely well, the model only passes the Turing test on a few functions, such as instances of common sense and trivia. What is evident when reading large bodies of its text is that the model has no "real" basis for understanding how the world works, be it fundamentals of physics or logic.

Cost - currently, given the cost of building and training this model (estimated at $4.6m for the compute alone), it’s likely cheaper to hire a human for certain narrow, known tasks.

commercialisation

There are a number of existing use cases already implemented by organisations using the OpenAI GPT-3 API closed beta, as well as a number of independent developers, researchers and hobbyists.

The obvious use case for this kind of NLP is text generation and semantic search - which you’ve likely previously experienced through Google’s search and Gmail products. It’s worth stressing, though, that GPT-3 isn’t merely doing tree-based search to derive the next word: it is capable of producing completely original works of writing and creativity, which has profound implications for what the model might be harnessed to do in the future.

Creativity: evidence of it is largely heralded as a defining chasm to be crossed between symbolic AI and some forms of AGI. Without getting into the philosophy of ‘true creativity’, it seems evident that to some extent GPT-3 is creating original works:

Poetry, fiction and creative writing - online user Gwern has generated a large body of works using the API, from humour and poetry to art criticism, and even continuations of tech advice posts in the style of Paul Graham.

Debate - the prior GPT-2 model has been unleashed on a subreddit (r/SubSimulatorGPT2), where all the bot participants are powered by the model itself. The results are interesting and debatably *not worse* than much of the content you see on Reddit from humans:

side note: kinda depressing when an AI is funnier than you

Code generation: GPT-3 is adept at writing code based on very few inputs (as per the example at the start of the post) and in a multitude of languages such as JavaScript or Python. This will undoubtedly have wide-scale implications for programming.

Whilst many of these use cases are “fun” and toy-like, how might they translate to more serious commercial uses?

Thanks to Hugging Face (👋🏼 Clement) for sharing some of their use cases / 🤗 used to protect identities

Journalism: we're already seeing the first instances of the lower-end of media content creation being eroded. Recently, Microsoft replaced some of its human journalists with auto-generated text using language models. Example below:

(model generated text in bold, human input in greyscale)

potential future use cases - platform?

I think the most interesting thing about this technology is that the future use cases are likely unimaginable - in the same way that it was unimaginable that a GPS chip in an iPhone would produce the ability to order a taxi, or that high-speed internet penetration would allow the creation of online influencers.

Platform - creation usually happens through the convergence of multiple technologies, each in turn accelerating the wave of adoption. There are a number of adjacent technologies for NLP, from the API to the silicon layers of the stack - such as the declining cost of compute, the development of faster GPUs and the emergence of Python as the most popular coding language. Recently, language models have been beating benchmarks nearly as fast as they’re being created. This acceleration is evidenced by historical benchmarks being matched every 16 months with approximately half the compute power (some type of law?).

Whilst current NLP technology is following a familiar pattern of initially underserving most users' needs by being feature-like, we will likely see developers build more productised, stand-alone products as these technologies converge. This is not an exhaustive list but merely a jump-off of ideas on how this tech might be used in the future:
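If you read the 16-month figure as a halving period (my interpretation of the trend above, not an established law), the compounding is easy to sketch. The `relative_compute` function and its parameter names are hypothetical, purely for illustration.

```python
def relative_compute(months: float, halving_period_months: float = 16.0) -> float:
    """Fraction of today's compute needed to hit the same benchmark result
    after `months`, assuming the requirement halves every `halving_period_months`.

    The 16-month default is the figure quoted in this post; treating it as a
    steady exponential trend is an assumption.
    """
    return 0.5 ** (months / halving_period_months)


if __name__ == "__main__":
    for years in (1, 2, 4, 8):
        share = relative_compute(years * 12)
        print(f"after {years} year(s): {share:.1%} of today's compute")
```

On that reading, four years is three halvings, so the same result would need about an eighth of the compute.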

Relationships + Personalities - with GPT-3’s replication of human-like capabilities, the ability to spoof machine-human interactions is close. How does a perception shift of reality, personality and even potentially opinion affect how we perceive personalities online? We’ve already seen synthetic influencers, such as Miquela and Shudu, develop into online celebrities. Last year, The Economist interviewed GPT-2 and asked it about the future of AI and technology. We can also imagine using this technology to ‘speak with’ certain historical characters, using their known works as a training set. Imagine speaking with a lost relative or William Shakespeare!?

Chat bots + Customer Service - might we be able to finally power usable conversational interfaces? For example, voice assistants such as Alexa or Siri, which it's safe to say do not have intuition, could benefit from this. Speech-to-text is still complex given limitations of real-world usage such as background noise and accent - though language models built on these systems will improve the UX by an order of magnitude.

Professional Services and Advice - A number of key tasks undertaken by practitioners such as lawyers, accountants and consultants are effectively to collect, summarise and create textual knowledge based on a narrowly defined body of text or learning - be it local laws, GAAP accounting or the fundamentals of business strategy (early example of data collection and analysis being outsourced to GPT-3 within Excel). Might some subsets of these jobs or tasks be replaced soon? (credit to Rodolfo for this one)

Gaming + entertainment: the ability to generate scenarios or content on the fly, based on players’ in-game decisions, will likely expand the permutations of games to being near limitless. This could also be applied to books, comics and at some point films. For example, AI Dungeon allows you to play a game which rewrites itself at multiple instances based on the player’s actions, creating an endless game.

Analysis - Much of the world’s predictive capabilities and sentiment are dependent on research analysts who use a mosaic of informational tools, both quantitative and qualitative, though they are largely reliant on intuition to derive informed guesses. Could GPT-3 be better at market research reports or stock price analysis than a human? (probably tbh)

If you’re building in this space or have interesting ideas on this - HMU!

→ If you want to play around with a generative language model, the easiest way to do that is on Hugging Face's 'Write with Transformer'

→ in fact, if you want to use pre-built language models, there's no better place to start than Hugging Face's open source repo

Thanks for reading!

Sam